Detecting AI audio and voice scams: your guide to spotting deepfake voices and AI audio scams in 2025

In June 2025, someone created a fake Signal account with a display name made to look like a State Department email address and started leaving AI-generated voicemails for government officials. The messages closely mimicked U.S. Secretary of State Marco Rubio's voice and writing style.

This wasn't some prank call for laughs. U.S. authorities believe the goal was to manipulate powerful government officials into handing over sensitive information or account access.

Fake voices are hard to detect by ear, but they aren't invisible. This guide shows you how to detect cloned voice patterns, identify AI voicemails, fake celebrity ads, and political misinformation, and protect yourself with AI voice checkers and fake voice detectors.

The growing threat of AI voice clones and deepfake audio

Voice cloning has reached a frightening level of realism. Experts say deepfake voices have crossed the "uncanny valley," meaning most listeners can no longer reliably tell real voices from AI-generated ones. That's why speech deepfake detection technology has become essential for everyone, not just tech experts.

The numbers:

- Americans reported 845,806 imposter scams in 2024, with nearly $2.95 billion in losses. That's billion, with a B!

- A 354% increase in enterprise deepfake attacks, with call centers losing billions of dollars to synthetic voice fraud. Many of these attacks might have been caught with proper AI voice checker systems in place.

- Today's voice AI needs as little as 30 seconds of audio to create a convincing voice clone. A single TikTok video, podcast clip, or YouTube speech can give scammers everything they need for a near-perfect copy. The ability to detect cloned voice patterns quickly has never been more critical.

- Elderly victims are especially vulnerable. Losses by people 60+ topped $445 million, an 8x increase since 2020. (We'll tell you more about grandma scams later in this guide.)

- Overall consumer fraud losses reached $12.5 billion in 2024, a 25% increase from 2023.

Much of this synthetic voice fraud falls under the label "vishing."

What is vishing and why deepfake vishing is especially dangerous

Vishing, aka voice phishing, is fraud carried out by phone or voicemail, usually by someone posing as an authority figure such as a bank employee, government official, or tech support agent. Scammers use emotion, urgency, and trust to pressure people into sharing information or sending money.

Now add AI to the mix. In deepfake vishing, the scammer calls the victim using a cloned voice of their mom, friend, CEO, or colleague. This tactic is harder to detect and often more successful than traditional vishing, and without a reliable fake voice detector these scams are very hard to catch in real time.

Common AI voice scams and deepfake vishing scenarios

CEO fraud

Scammers fake the boss's voice to push urgent money transfers. In the UAE, scammers used a cloned executive's voice to fool a bank manager into transferring $35 million.

Government impersonation fraud calls

Scammers posing as police officers, tax agents, or immigration officials threaten victims with fake fines or criminal charges. The FBI logged 17,000+ complaints in 2024, with losses topping $405 million.

In early 2024, New Hampshire voters got calls featuring a cloned President Joe Biden, telling them not to vote in the state's primary. The FCC later ruled such uses of AI-generated voices in robocalls illegal. An AI voice checker could have helped voters identify this manipulation.

Emergency scams (aka Grandmother scams 2.0)

Scammers clone the voices of children or grandkids, calling relatives in fake emergencies. In 2024, this led to 357 complaints and about $2.7 million in losses.

Philadelphia attorney Gary Schildhorn got a panicked call from his "son," who claimed he'd been in an accident with a pregnant woman and was now in jail. The voice was so real (the emotion, the tone, everything) that he nearly fell for it.

Tech/customer support scams

Scammers pose as customer service reps, convincing victims to hand over account access or cash. In 2024 alone, there were 36,002 reported cases, totaling $1.46 billion in losses. Businesses are increasingly training staff to detect cloned voice attempts in customer service interactions.

Romance scams

Posing as lovers or celebrities, scammers lure victims with synthetic voice calls and videos. In one heartbreaking case, scammers impersonated actor Steve Burton, sending a woman AI-generated voice notes, love messages, photos, and videos until she handed over her entire life savings. We covered this story in detail in our recent article: read the full Steve Burton case.

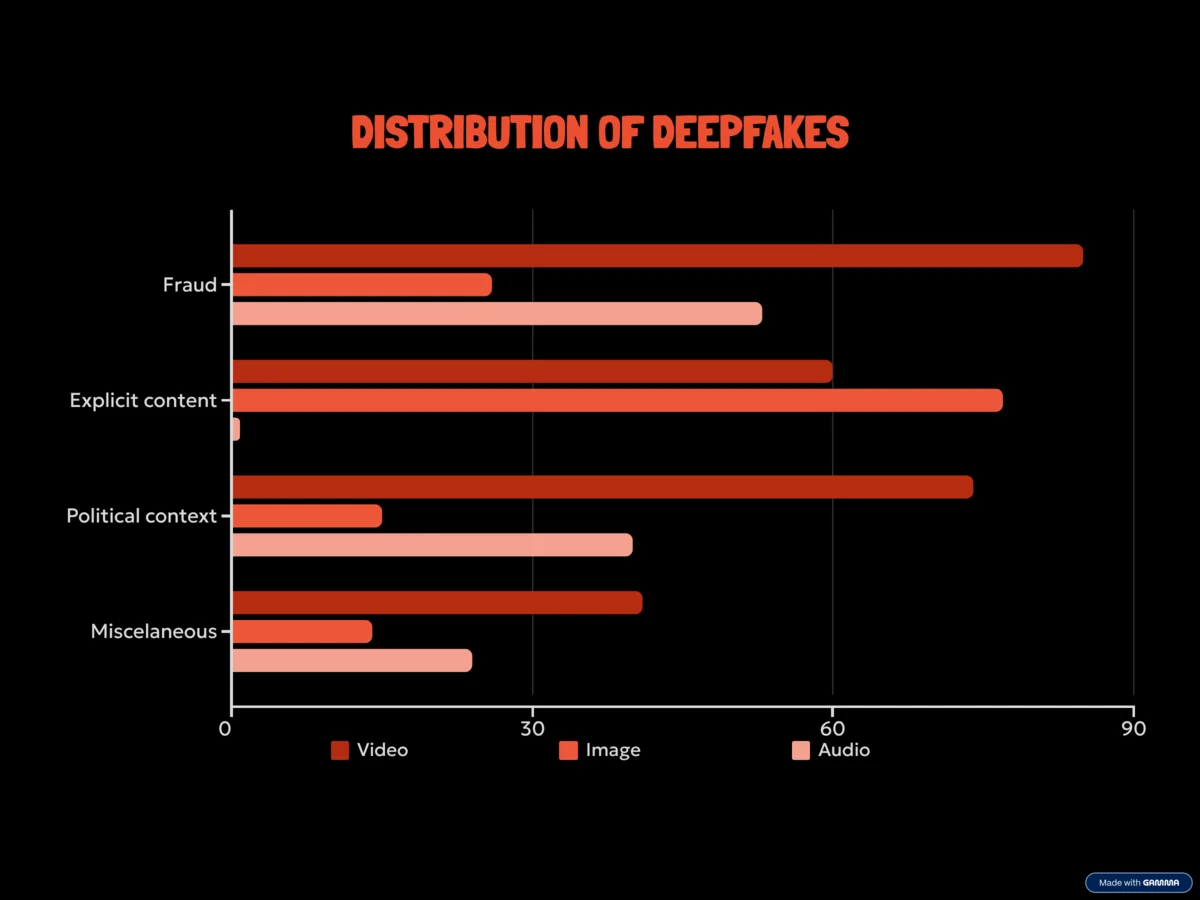

As you can see, scammers don't stop at voices. They can fake anything: a photo, a text message (the easiest one), or a video. So use an AI text detector to catch AI-written love letters, fake DMs, business emails, and too-perfect chat messages. Learn more about how major media outlets handle AI content in our BBC AI content analysis.

To stop romance scam "video calls" or fake investment ads before they fool you, use an AI video detector. And to spot AI-generated photos that scammers use to build fake personas, try an AI image detector.

Celebrity scams

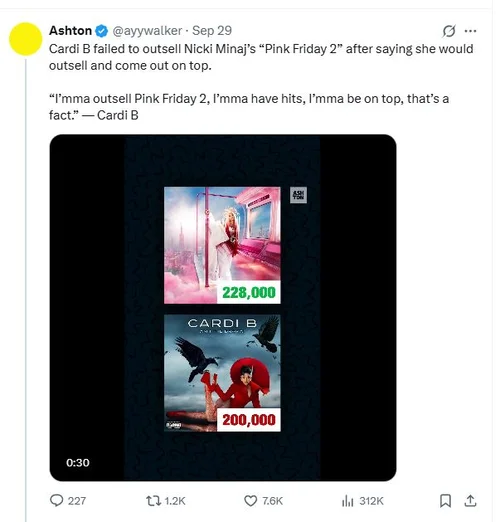

There's also a special flavor of deepfake trickery brewing on social media. Instead of full-on fake interviews or scammy phone calls, scammers (and clout chasers) sometimes just put a cloned celebrity voice over a static image or video. The goal isn't always money; sometimes it's pure virality.

For example, a recent viral post claimed Cardi B was talking about outselling Nicki Minaj's Pink Friday 2. The audio was legit except for one tiny segment: the part about the album. That snippet alone was AI-generated. One tweak, one deepfake audio clip, and the internet ate it up. Speech deepfake detection tools help content creators and fans verify viral moments like this before they spread.

Luckily, nearly everyone can now fight back using user-friendly AI audio detectors. Let's see how you can spot and stop deepfake voices.

How to spot AI-generated voice

Here's your checklist before you go into panic mode. Be all ears for these red flags.

Unnatural audio elements

- Overly perfect pronunciation with no stumbles or natural "ums" (like Siri).

- Flat tone, no real emotion.

- Odd pauses or a robotic rhythm.

- No natural breathing.

- Background sounds that don't make sense for the situation.

Context clues

- Urgent, pressure-packed requests are a classic manipulation move.

- Asking for money or sensitive info.

- Calls from unfamiliar numbers or apps (especially when the caller claims to be someone close to you).

- "Sorry, I can't do video right now" excuses.

- Missing personal details only the real person would know.

But, and it's a big but, even trained listeners get fooled. Human ears alone aren't enough anymore, so it's time to call in AI audio detectors. Professional speech deepfake detection tools analyze audio patterns the human ear can't pick up, making them essential for anyone who wants to detect cloned voice attempts accurately.

What is an AI audio detector and how does it work?

AI audio detection is the technology that helps us figure out whether a voice is human or an AI-cloned deepfake. AI audio detectors like isFake.ai analyze audio files using algorithms looking for micro-patterns no human can hear. You upload a file, wait 10–30 seconds, and get a confidence score.

The system has been trained on huge datasets containing examples of both human voices and AI-generated speech, which lets it learn which features are typical of each. So when it receives a new audio file, the system compares it against what it learned from thousands of human and AI voice examples to judge its authenticity.
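To make the "compare against labeled examples" idea concrete, here is a toy sketch in Python. It is not isFake.ai's method: it assumes each clip has already been reduced to a tiny feature vector (the two features and all values are hypothetical) and uses k-nearest neighbors, a simple textbook classifier, to turn labeled human and AI examples into a confidence score.

```python
import math
from typing import List, Tuple

# Hypothetical labeled example: (feature_vector, is_ai).
# Features assumed here: [pitch_jitter_percent, pauses_per_second]. Values are made up.
Example = Tuple[List[float], bool]


def ai_confidence(clip: List[float], examples: List[Example], k: int = 3) -> float:
    """Return the fraction of the k nearest labeled examples that are AI-generated."""
    if k < 1 or k > len(examples):
        raise ValueError("k must be between 1 and the number of examples")
    # Rank every labeled example by Euclidean distance to the new clip's features.
    nearest = sorted(examples, key=lambda ex: math.dist(clip, ex[0]))[:k]
    ai_votes = sum(1 for _, is_ai in nearest if is_ai)
    return ai_votes / k


# Toy "training set" (illustrative numbers, not real measurements).
training: List[Example] = [
    ([1.2, 0.30], False),  # human-like: more pitch wobble, more pauses
    ([1.0, 0.25], False),
    ([0.9, 0.28], False),
    ([0.2, 0.05], True),   # synthetic-like: very steady pitch, few pauses
    ([0.3, 0.08], True),
    ([0.25, 0.06], True),
]

print(ai_confidence([0.28, 0.07], training))  # prints 1.0
```

Real detectors use far richer features and models trained on large datasets; the sketch only shows how a score between 0 and 1 can fall out of comparing a new clip with labeled examples.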

AI voice checker technology examines multiple audio characteristics simultaneously: spectral analysis, prosody patterns, breathing signatures, and micro-fluctuations in pitch and tone. This multi-layered approach helps fake voice detector tools catch sophisticated deepfakes that any single check might miss.
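One "micro-fluctuation in pitch" that speech researchers measure is jitter: how much the length of each vocal-cord cycle varies from one cycle to the next. The Python sketch below computes local jitter (the mean absolute difference between consecutive pitch periods, divided by the mean period) from a list of periods. It assumes the periods were already extracted by a pitch tracker (not shown), and it illustrates a single feature, not isFake.ai's detector. An unusually low value is at most a hint, never proof, that a voice is synthetic.

```python
from typing import Sequence


def local_jitter(periods_ms: Sequence[float]) -> float:
    """Local jitter in percent: mean |T[i] - T[i-1]| divided by mean T."""
    if len(periods_ms) < 2:
        raise ValueError("need at least two pitch periods")
    # Cycle-to-cycle differences between neighboring pitch periods.
    diffs = [abs(b - a) for a, b in zip(periods_ms, periods_ms[1:])]
    mean_diff = sum(diffs) / len(diffs)
    mean_period = sum(periods_ms) / len(periods_ms)
    return 100.0 * mean_diff / mean_period


# Hypothetical pitch periods in milliseconds for a voice around 125 Hz (8 ms per cycle).
natural = [8.0, 8.1, 7.9, 8.05, 7.95]  # small, irregular wobble
steady = [8.0, 8.0, 8.0, 8.0, 8.0]     # perfectly regular cycles

print(round(local_jitter(natural), 2))
print(local_jitter(steady))  # prints 0.0
```

A detector would combine a measure like this with many others (spectral, prosodic, breathing), because natural voices vary widely and modern clones can imitate some irregularity.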

And it's not just for cybersecurity pros anymore. In 2025, knowing how to use an AI voice detector is as essential as spotting phishing emails was 10 years ago.

Who should use it?

- Individuals. Keep scammers away from your family and property. Regular use of a fake voice detector can help prevent emergency scams and protect elderly relatives.

- Content creators and journalists. Authenticate interviews and protect your voice. Speech deepfake detection ensures your published content is genuine.

- Businesses. Stop CEO fraud and vendor scams. Companies integrate AI voice checker systems into their verification protocols.

- Governments. Guard against impersonation of politicians, diplomats, and officials. The ability to detect cloned voice attempts protects democratic processes.

Why isFake.ai for speech deepfake detection?

isFake.ai is a reliable AI voice checker and fake voice detector. Here's why:

- 95% accuracy in identifying AI-generated audio across all major voice cloning platforms. Our speech deepfake detection algorithms are constantly updated to catch the latest AI voice models.

- 10-30 second analysis, so you get results fast when you need to check a saved recording for cloned voice patterns.

- Multi-format support. Upload MP3, AAC, WAV, and other common audio formats without conversion hassles.

- Visual confidence indicators highlight suspicious audio fragments, making it easy to understand results even for non-technical users. Our AI voice checker shows exactly which parts of the audio are synthetic.

- No registration required for quick checks, perfect for urgent verification needs. Use our fake voice detector immediately without creating an account.

- Multimodal detection. Check text, images, video, and audio, all in one place. Scammers often combine several types of fake content; detect them all with one tool.

- Privacy-first. Your audio files are analyzed securely and not stored on servers. We understand the sensitive nature of the content you're verifying.

isFake.ai gives you the tools for reliable speech deepfake detection and the confidence to detect cloned voice patterns instantly.

How to protect yourself from deepfake voice scams

Winning the deepfake battle takes awareness and smarter habits. Here's your anti-scam action plan:

- If a "family member" or "bank" calls with a weird urgent request, hang up and call back on a verified number.

- Use two-factor authentication (2FA) to make it harder for scammers to break into your accounts and steal data they can use in scams.

- Limit public audio/video. Less content online = harder to clone your voice. Every podcast appearance or social media video gives scammers more material to clone your voice from.

- Create secret family or business code words for emergencies.

- Don't click shady links. Ever.

- Businesses should educate teams on spotting deepfakes and using AI detectors.

- Implement callback verification or face-to-face checks for big transactions or sensitive info.

- Keep an AI voice checker bookmarked on your devices for instant access when you receive suspicious voicemails.

Don't let deepfake voices fool you: stay one step ahead.

With a mix of awareness, smart security habits, and AI detectors like isFake.ai, you can keep your family, business, and identity safe.

Detect AI audio in seconds with isFake.ai

- Upload audio in any common format: MP3, AAC, WAV

- Click "Check"

- Get fast, accurate results (10-30 seconds)

- Read the result. Here's the breakdown on how to read the results of all our AI detectors.

Share with your family, colleagues, friends, oh and followers, too. Help others detect cloned voice scams before they become victims.