Why ‘Undetectable AI writing’ doesn’t exist: the myth, risks & safer alternatives

AI writing tools are powerful and tempting to use. But the idea that you can make AI-generated text totally invisible to detectors is no more than a myth. Whether it’s students worried about plagiarism filters or content creators trying to dodge detection, they all look for a way to slip past AI checks. It seems appealing: shuffle words, swap in synonyms, or run text through an ‘AI humanizer’ and, here it goes, ✨undetectable content✨

But, in fact, detectors are continually improving, adding new signals and checks. Each time a trick surfaces, systems adapt. In short, playing cat-and-mouse with detectors is a losing game.

The myth of ‘undetectable AI writing’

Since ChatGPT’s public launch in late 2022, using it or other chatbots to create text has become appealing… attractive… normal… But no one wants AI-generated content to take over the world, so detectors are here. And everyone wants their AI-written text to look real, so humanizers are here too! It seems like an endless circle, where students are constantly trying to slip an AI-generated essay past Turnitin, and teachers are trying to catch them.

Bypassing detection can feel tempting because it’s a shortcut. Just pretend the AI text is original, and avoid the extra work of heavy editing. But these tactics fail long-term for a few reasons. First, today’s detectors use many different signals (not just one formula), so a tweak that fools one tool might fail against another. Second, writing that’s prompted to hide AI often ends up unnatural or inconsistent. Third, detectors learn new tricks every year (and often faster), so every ‘undetectable’ hack soon becomes detectable. In the end, it’s easier and safer to focus on writing well than to chase a fleeting cheat.

What do AI detectors actually look for?

AI detectors don’t ‘read’ text for meaning like a human. Instead, they hunt for patterns and anomalies in writing style. Broadly speaking, detection methods fall into three categories: statistical patterns, stylometric signals, and structural anomalies.

Statistical patterns (Perplexity, Burstiness, Entropy)

Detectors often use statistical metrics like perplexity and burstiness. Perplexity measures how predictable the text is. Text written by AI is usually very ‘safe’ and predictable, so its perplexity is low. Human writing tends to introduce more randomness, from little typos to local jokes. That’s exactly what raises the perplexity. Burstiness measures sentence variation: humans naturally mix short, punchy lines with longer, complex sentences. AI tends to write more uniformly. A string of 20-word sentences with Subject-Verb-Object patterns looks suspiciously ‘AI-ish’: the detector sees low burstiness and, boom, raises a flag!
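To make these two metrics concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any particular detector works: real tools typically score perplexity with a large neural language model, while this version uses a simple add-one-smoothed unigram model built from a reference text you supply. The function names (`burstiness`, `unigram_perplexity`) and the choice of coefficient of variation as the burstiness measure are our own illustrative picks.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; apostrophes stay inside words."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Coefficient of variation (std dev / mean) of sentence lengths in words.

    Assumption: this is one simple proxy for 'burstiness'; 0.0 means every
    sentence has the same length.
    """
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    Assumption: a toy stand-in for the neural language models real tools use.
    Lower values mean the words in `text` are more predictable.
    """
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no word tokens")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))
```

Three six-word sentences in a row give a burstiness of exactly 0.0, while a mix like ‘Stop. [long sentence]. Why?’ scores well above 1. Likewise, words the reference text has already seen score a lower perplexity than words it has never seen.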

Stylometric signals (Structure, Rhythm, Semantics)

Stylometry is the study of writing style. Each writer has their own quirks like favorite words, syntax habits, or punctuation patterns. AI-generated text often (almost all the time) lacks the nuance of a real person (because it’s not one). Advanced detectors analyze word choice frequencies, sentence structure, grammar style, and even the semantics of the writing. They also look at rhythm and flow: do ideas leap in a jerky, unrealistic way? Is the vocabulary oddly consistent? If something smells ‘too perfect’ or robotic, detectors notice. And flag.
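As a rough illustration of what ‘stylometric signals’ can mean in practice, the sketch below turns a text into a small profile of numbers. Everything here is an assumption made for the example: the feature set, the short `FUNCTION_WORDS` list, and the `stylometric_profile` name are ours, and real detectors use far richer features than these.

```python
import re
from collections import Counter

# Hypothetical, deliberately short list of common function words.
FUNCTION_WORDS = ("the", "of", "and", "to", "a", "in", "that", "is", "it", "but")


def stylometric_profile(text: str) -> dict[str, float]:
    """Return a few simple style features for `text`."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        raise ValueError("text has no word tokens")
    counts = Counter(words)
    profile = {
        # Vocabulary variety: unique words divided by total words.
        "type_token_ratio": len(counts) / len(words),
        # Average word length in characters.
        "avg_word_length": sum(len(w) for w in words) / len(words),
        # Mid-sentence and expressive punctuation per word (periods excluded).
        "punctuation_per_word": len(re.findall(r"[,;:!?\-]", text)) / len(words),
    }
    # Relative frequency of each function word, a classic stylometric signal.
    for fw in FUNCTION_WORDS:
        profile[f"freq_{fw}"] = counts[fw] / len(words)
    return profile
```

Comparing the profile of a new text against profiles of a writer’s earlier work is one simple way to spot a sudden change in style, which is the kind of mismatch the paragraph above describes.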

Behavioral & structural anomalies

Beyond style, detectors also scan the text for odd anomalies (as if with their own PKE Meter). Some long phrases might exactly match text from the model’s training data. Or the text might use bogus slang or forced idioms that don’t fit the context. Modern detectors also cross-check coherence and factual consistency: if a sentence suddenly shifts tense or injects an off-topic exclamation, it raises eyebrows.

isFake.ai analyzes text, images, audio and video using statistical signals, stylometric markers and anomaly detection. But it doesn’t give a binary verdict. It shows probabilities and highlights why certain passages look synthetic.

Why you can’t reliably bypass AI detection

Every year, people roll out clever hacks: swap in synonyms (so ‘outstanding’ becomes ‘perfect’), shuffle clauses, insert casual slang, sprinkle in emojis, or run output through a ‘humanizer’ app. Indeed, it can work temporarily. But there are some… drawbacks.

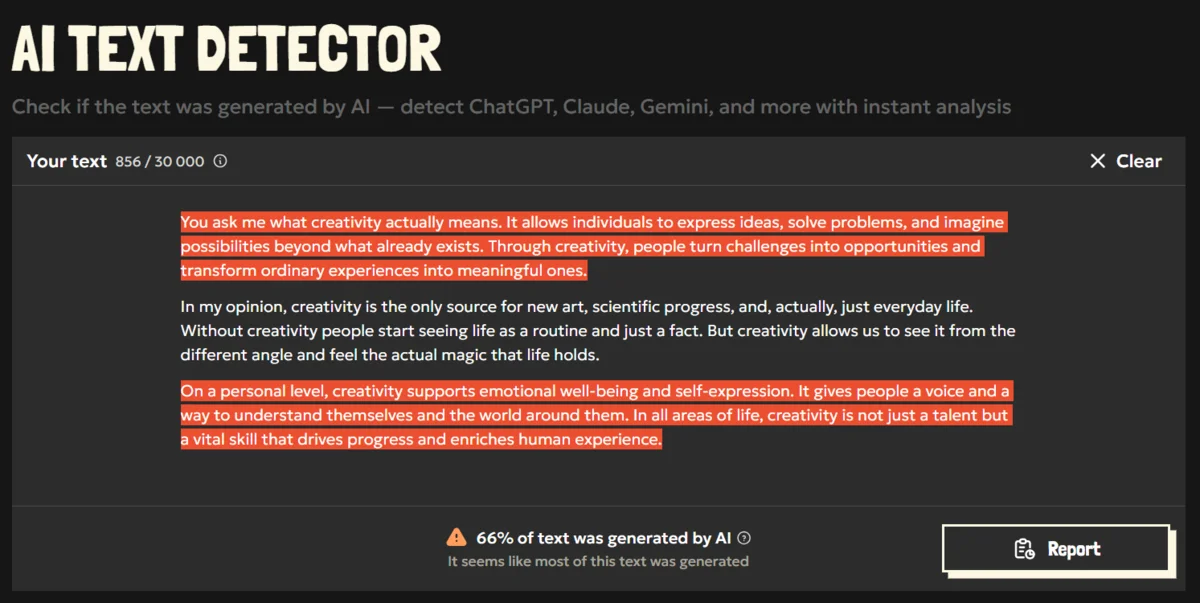

First, detector variety means no single trick wins. Each detection tool uses its own mix of signals. One model might emphasize perplexity; another might analyze sentence-level grammar. A tweak that lowers the score on one platform could still be red-flagged by another. For example, the isFake AI text detector combines the signs typical of AI generation and runs a fresh analysis every time you press the ‘Check’ button. The most suspicious parts get flagged.

Second, these common hacks often break the writing. Randomly swapping synonyms can jarringly change tone or introduce inconsistencies. Plus, if you’re editing dozens of paragraphs, manually scrambling them is tedious and error-prone. Humanizer apps can help, as they shuffle phrasing and add slang, but detectors now flag those inconsistencies and forced idioms as well.

Finally, bypassing isn’t timeless. Detectors keep learning. A method that gave you a ‘clean’ score in January might be caught by an update in February. Whenever generative AI and humanizers speed ahead, detectors catch up almost immediately.

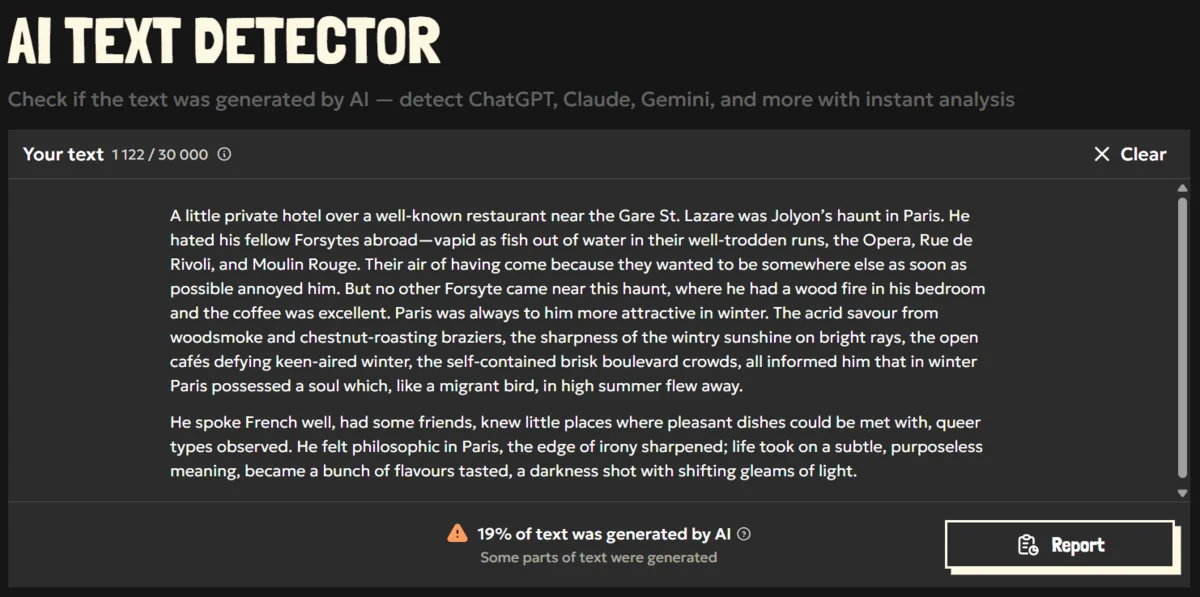

False positives: when human writing gets flagged

No student wants to spend a couple of hours writing an essay only to be left wondering ‘Why does my text get flagged as AI???’

Sometimes genuinely good human writing can look suspicious. If your style is too polished or uniform, detectors might falsely tag it. For example, a professional report that ‘tightens’ every sentence to 15 words, removes contractions, and follows a rigid template can mimic an AI’s statistical profile. In this case the detector might see very low burstiness (every sentence about the same length) and steady perplexity (all vocabulary from formal guidebooks). In effect, it mistakenly reads your well-edited work as ‘robotic’, and a false positive happens.

To avoid this and win in a human vs. AI text battle, you can introduce deliberate variety.

- Vary your sentence lengths and their grammatical structure: mix short and long, active and passive.

- Add personal opinions (if possible) and real-life stories (if acceptable).

- Toss in rhetorical questions and colloquial turns.

- Use different punctuation marks. Even an occasional em-dash can make the prose feel more organic (yes, em-dashes exist in real-life writing too…).

- In practice, try reading your text aloud. A monotone cadence might signal low burstiness.

False negatives: when AI slips through

On the flip side, we gotta admit, some AI-crafted text flies under the radar. This is where false negatives enter the stage. Cutting-edge AI models (GPT-5.2, Gemini 3 Pro, Claude Opus 4.5) are so refined that their output can closely mimic a real writer’s style. A short email or tweet can have too little data for detectors to analyze meaningfully, so it might simply pass as normal. Also, if an AI model is tuned on a specific author’s writing, it can pick up that person’s quirks. As a result, detectors calibrated on generic GPT outputs might miss a ‘customized’ style.

In practice, AI content can ‘slip through the cracks’ for a few reasons. Short texts or content rewritten multiple times leave fewer obvious flags. Also, while older detectors might have cried wolf too often, newer models aren’t perfect either. For example, OpenAI reported that its official AI classifier correctly flagged only about 26% of AI-written text as ‘likely AI’, missing the rest. So a clever AI or a clever human adapter can sometimes evade filters. The key point: no tool is foolproof, and a confident ‘not AI’ result can still be wrong.
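To see what a 26% catch rate means in raw numbers, here is a quick back-of-the-envelope sketch. The batch of 1,000 AI-written texts is a made-up figure for illustration, and `detection_outcomes` is a hypothetical helper; only the 26% comes from the report mentioned above.

```python
def detection_outcomes(ai_texts: int, recall: float) -> tuple[int, int]:
    """Split a batch of AI-written texts into (caught, missed) for a given recall."""
    caught = round(ai_texts * recall)
    missed = ai_texts - caught
    return caught, missed


# Hypothetical batch of 1,000 AI-written texts at a 26% catch rate.
caught, missed = detection_outcomes(1000, 0.26)
false_negative_rate = missed / 1000
print(caught, missed, false_negative_rate)
```

At that rate, roughly three out of every four AI-written texts in the batch pass unflagged, which is why a ‘not AI’ result on its own proves very little.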

That’s why isFake.ai displays its confidence score, not a Human/AI label. This makes it clear when the result is uncertain and avoids the false sense of confidence common in older detectors.

The real risk: not technical – human

Creating a 100% undetectable AI isn’t just technically hard, but also ethically risky. Notice how the real consequences always land on a human, not on the AI. Students face plagiarism accusations and failed assignments when they get caught using AI. Journalists spread misinformation and lose credibility. One AI-written paper can become a turning point in any career, and there’s no guarantee that the turn is going to be a good one…

Content authenticity is the long-game value. Whoever your readers are, high school teachers or blog followers, they crave a genuine voice and insight. And staying honest about your use of AI will always be wiser than constantly trying to hide it. Especially when the detectors are getting better day by day.

There’s nothing bad about synthetic writing and using AI. But we will never endorse the myth of ‘undetectable AI text’.

(Figure: Chart – Risk exposure. Bar graph comparing the risks of using human-only content vs. AI-assisted content, highlighting reputation and detection exposure.)

Safer alternatives to ‘Undetectable AI’

As long as the isFake AI text detector exists, there’s no way to beat detectors. Focus instead on how to humanize AI text and make it unique:

- Edit manually. AI output is not the final masterpiece; treat it as your first draft. Rewrite sections in your own words. Personalize the tone. Check for consistency. Manual edits break up machine patterns and answer the question of how to make AI text more human.

- Add personal context. Include anecdotes, examples, or facts unique to you and only you. AI usually doesn’t know your experiences (unless you feed them to it), so tossing in personal details (or even jokes) makes the text actually yours and not robotic. It also enriches the content. And can make your reader smile!

- Break the rhythm. Vary sentence length. And structure. On purpose. Combine short and long sentences. Yeah, just like we did here. Use fragments or exclamations for emphasis. Add dialogue or quotes. Uneven rhythm looks natural, humanizes AI writing, and breaks the uniform pattern detectors expect to see in AI-generated text.

- Use sources. Add some relevant and up-to-date references and quotes. First, it makes your text more ‘alive’; second, it builds a bond with the reader, who can reflect on those citations. Detectors can sometimes flag stale or repeated facts, so fresh sources keep your writing grounded in reality.

Want to write with confidence? isFake.ai helps you understand why your text looks synthetic and how to adjust it. Use detection as guidance – not something to ‘beat’. Let these tools be a sounding board: they point out awkward spots, but it’s up to you to refine them.