BBC AI Content Analysis: 13 Years of Articles

(2012-2025)

Ever wondered whether the BBC uses AI to write its news stories? We decided to find out by running BBC articles from 2012 to 2025 through our AI text detector.

Artificial intelligence isn’t the future of journalism. It’s already reshaping how news gets written, edited, and delivered to your phone every morning. What started as a newsroom experiment has become essential technology that helps journalists write faster, translate content, and process mountains of information in seconds.

Yet this shift raises important questions about AI in journalism: Can readers really trust AI-polished articles? How much automation is too much when it comes to AI-generated news? And most importantly, when you scroll through your morning news, how do you know what's human and what's artificial?

The BBC is a good place to observe these changes. As Britain's most trusted news source and a media giant that reaches millions worldwide, the BBC sends ripples across the entire industry with every decision it makes about AI in journalism. Its approach doesn't just shape its own newsroom; it influences how people everywhere consume news daily.

That's why we explored 13 years of BBC content, from 2012 to 2025, using our isFake.ai AI text detector to analyze trends, surface insights, and share the data so anyone can verify the results.

What we did

We didn't just skim a few articles and call it research. Our team analyzed thousands of BBC articles published between 2012 and 2025, focusing specifically on the BBC's Artificial Intelligence news section. We ran them through our isFake.ai AI text detector and built a comprehensive database showing how BBC content has evolved over 13 years, creating the most detailed picture yet of AI-generated news detection patterns in major journalism.

Our dataset includes everything from short news briefs to long investigations. It tracks AI probability scores, publication dates, and article lengths. We also marked major AI milestones such as GPT-3’s launch in May 2020 and GPT-4’s in March 2023, so we can compare the timing of new models with changes in the articles.

The complete dataset lives on GitHub, where anyone can download it and verify our findings.

AI in journalism: How the BBC actually uses AI

The BBC announced its use of AI in journalism in February 2024. Rhodri Talfan Davies, the Director of Nations, set out three main principles for AI use: act in the public's interest, focus on talent and creativity, and be open with audiences. In practice, this means readers are told when AI contributes to content. Senior journalists review everything AI produces before publication to maintain quality, and AI is not used for fact-checking or for writing news stories.

In 2025, the BBC launched more ambitious AI tools. Its "At a Glance" feature provides AI-generated bullet-point summaries of longer news articles and is particularly popular with younger audiences who want quick reads.

The BBC also created BBC Style Assist, a BBC-trained large language model that reformats Local Democracy Reporting Service (LDRS) content into BBC house style. This lets the BBC publish more local stories without adding production time. This approach to AI in journalism demonstrates how major newsrooms balance automation with editorial standards.

BBC articles and AI probability trends (2012-2025)

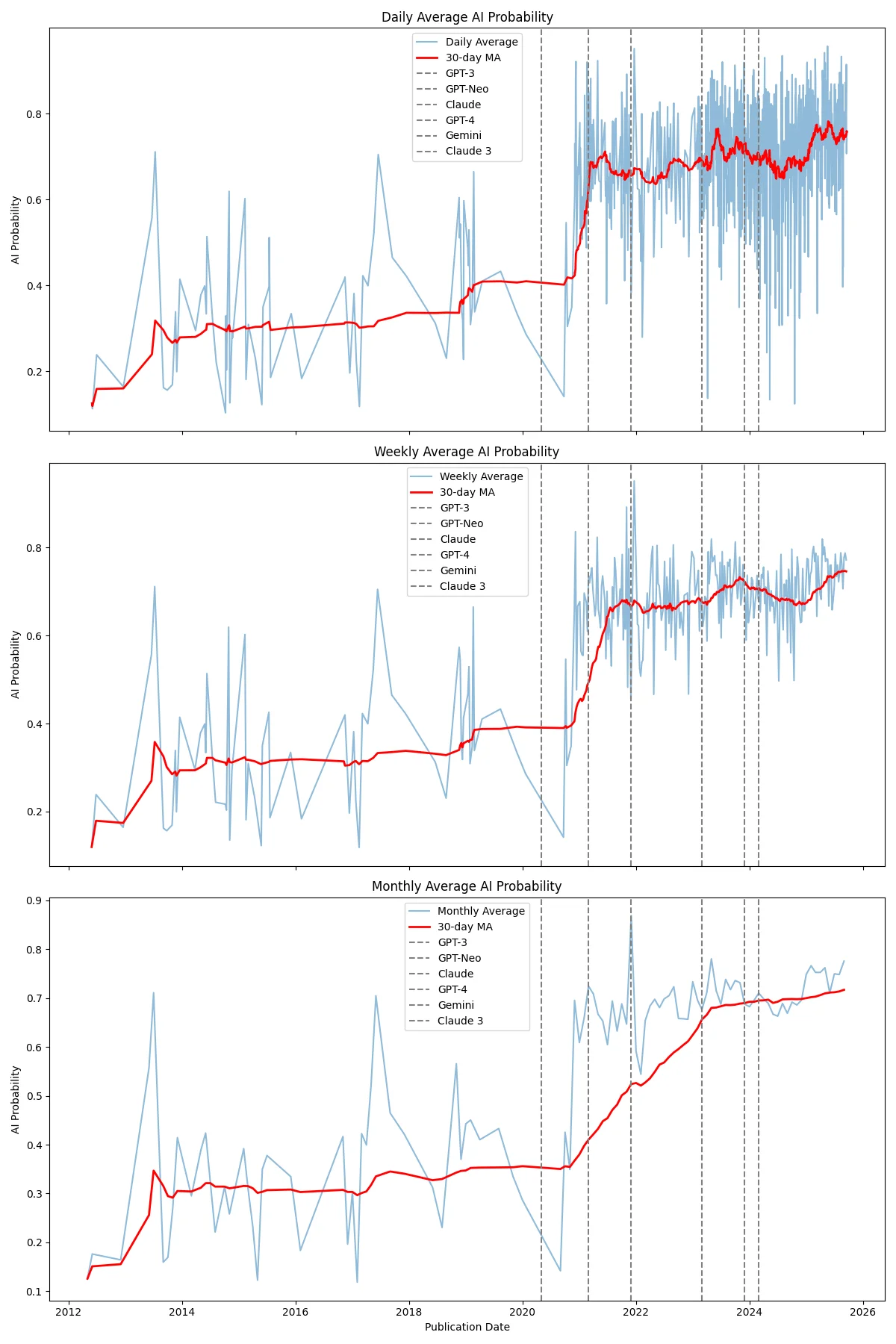

Our analysis with an AI content detector reveals a dramatic shift in scores that coincides with the release of major AI tools:

2012-2020

During these years, our AI content detector consistently returned low AI probability scores of 0.2 to 0.4, which is what any journalism professor would expect: predominantly human-authored content. This was an era when AI writing tools were too primitive to fool anyone, let alone produce publishable news copy.

Late 2020-Early 2021

AI detection scores jumped right when GPT-3 and GPT-Neo came out. During this period, some articles still scored low and read as human-written, while others scored above 0.6. This mix suggests newsrooms were experimenting with AI as these technologies became available, blending traditional reporting with AI assistance.

2021-2025

Scores have stayed high since then, at around 0.7 to 0.8. The red smoothing line shows a clear breakpoint around late 2020 to early 2021, after which the AI-generated news probability rose to 70-80% and stayed there through 2025.

Occasional pre-2021 spikes likely represent false positives rather than actual AI usage, since these tools were rare back then.
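The article doesn't say which smoother produced the red line, so the following is only a hypothetical stand-in: a centered moving average over date-sorted scores, plus a before/after median comparison around a candidate breakpoint. The sample records and the cutoff date are made up for illustration, not taken from the dataset.

```python
from datetime import date
from statistics import mean, median

# Hypothetical (publication date, AI probability) records, invented for illustration.
articles = [
    (date(2018, 3, 1), 0.25),
    (date(2019, 7, 12), 0.31),
    (date(2020, 2, 20), 0.38),
    (date(2020, 11, 5), 0.62),
    (date(2021, 4, 9), 0.74),
    (date(2022, 8, 30), 0.79),
    (date(2024, 1, 15), 0.77),
]

def rolling_mean(values, window):
    """Centered moving average; the window shrinks at the edges of the series."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(mean(values[lo:hi]))
    return out

def split_at(records, cutoff):
    """Median AI probability before, and on/after, a candidate breakpoint date."""
    before = [p for d, p in records if d < cutoff]
    after = [p for d, p in records if d >= cutoff]
    return median(before), median(after)

records = sorted(articles)  # sort chronologically before smoothing
smoothed = rolling_mean([p for _, p in records], window=3)
pre, post = split_at(records, date(2020, 10, 1))  # hypothetical cutoff
print(f"median before: {pre:.2f}, after: {post:.2f}")
```

A moving average is the simplest smoother to reason about; a LOWESS fit or a formal change-point test would be more rigorous choices if the goal is to locate the breakpoint statistically.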

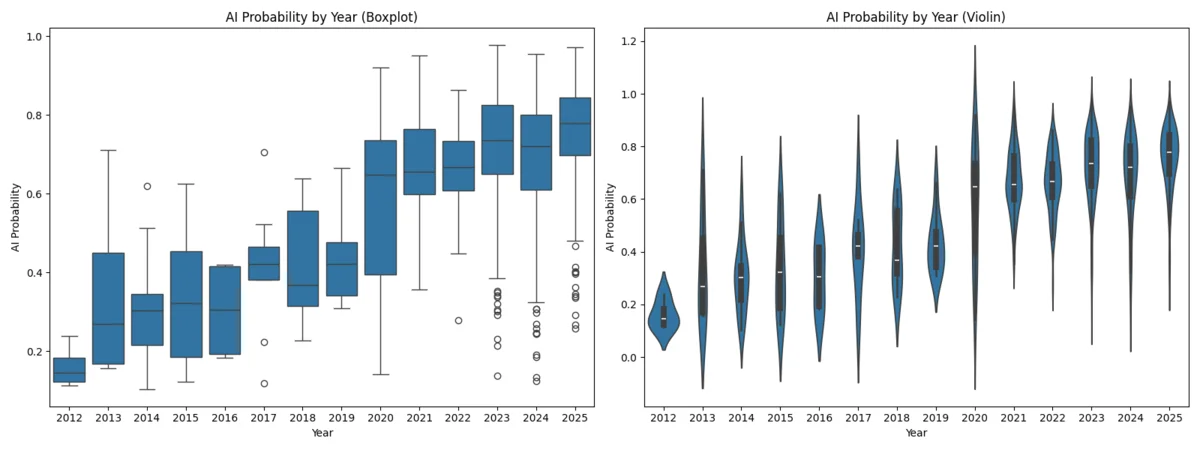

Yearly analysis: distribution patterns

The year-by-year analysis reveals clear patterns:

Pre-2020 baseline

Years 2012-2019 show minimal AI detection with consistently low probability scores, matching the expectation that most writing came from humans, since AI tools were rare then.

2020 transition year

The median AI probability rises sharply, and the range of values is wide. This points to a substantial mix of human-written and AI-generated content during this period.

Post-2020 convergence

After 2020, median values kept rising slowly while the range of values narrowed. This suggests most content was likely AI-assisted, marking a significant shift in how AI is used in journalism.

The violin plots make this easy to see: the wider the violin at a given AI probability level, the more articles score at that level. You can see the shift from human-written content (wide areas at low AI probability) to AI-assisted content (wide areas at high AI probability).
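The "rising median, narrowing range" pattern can be expressed numerically with a per-year median and interquartile range (IQR). This is a minimal sketch; the (year, score) pairs are hypothetical, and we assume IQR as the measure of "range", which the article does not specify.

```python
from collections import defaultdict
from statistics import median, quantiles

# Hypothetical (year, AI probability) pairs standing in for the real dataset.
scores = [
    (2015, 0.22), (2015, 0.30), (2015, 0.35), (2015, 0.28),
    (2020, 0.25), (2020, 0.45), (2020, 0.70), (2020, 0.85),
    (2023, 0.74), (2023, 0.78), (2023, 0.80), (2023, 0.76),
]

def yearly_summary(pairs):
    """Return {year: (median, interquartile range)}; needs >= 2 scores per year."""
    by_year = defaultdict(list)
    for year, p in pairs:
        by_year[year].append(p)
    summary = {}
    for year, values in sorted(by_year.items()):
        q1, _, q3 = quantiles(values, n=4)  # three cut points: Q1, median, Q3
        summary[year] = (median(values), q3 - q1)
    return summary

for year, (med, iqr) in yearly_summary(scores).items():
    print(f"{year}: median={med:.2f}, IQR={iqr:.2f}")
```

In this toy data the transition year has the widest IQR and the latest year the narrowest, which is the shape the violin plots describe.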

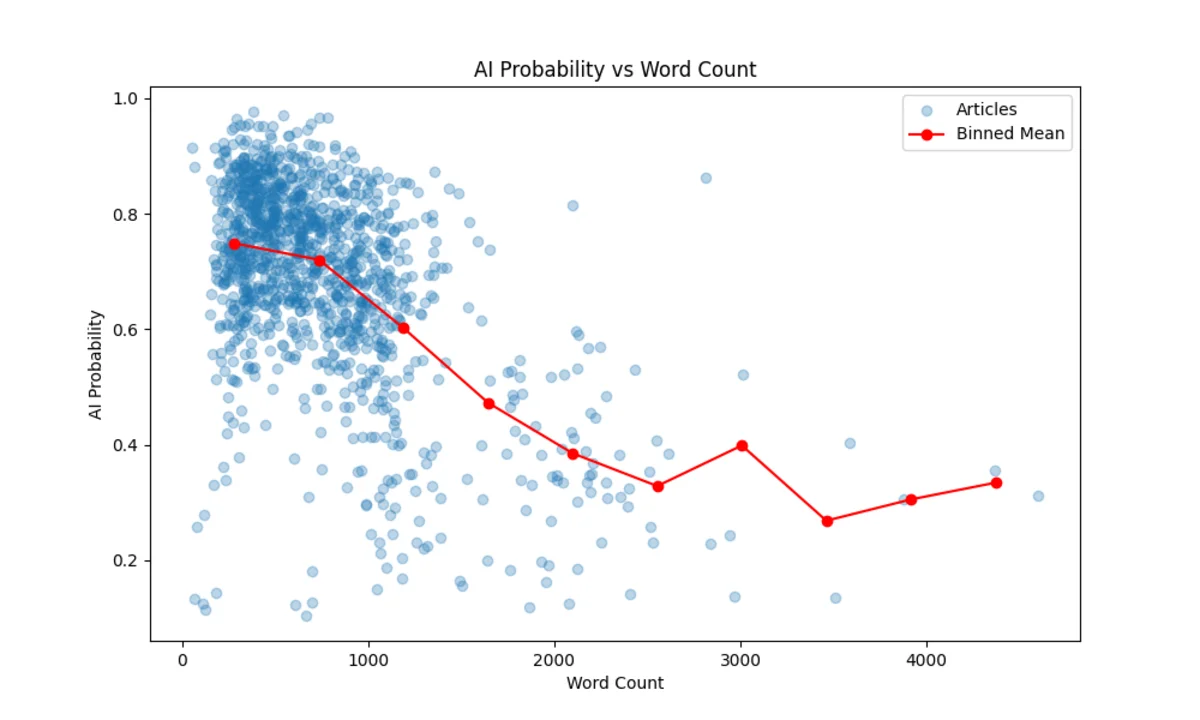

Does article length affect AI detection?

Our analysis shows a clear link between article length and AI detection scores (see the note below):

- Short news pieces and summaries tend to get flagged as AI more often

- Longer articles usually have lower AI probability scores

- In-depth reports are more often classified as human-written

- Scores trend downward as word count increases, across all time periods

This pattern is consistent with how journalism works. Complex investigations require human expertise, source checking, and an understanding of context, which AI can’t yet provide. AI tools, by contrast, are useful for quick summaries, breaking news, and simple updates where speed matters more than depth.
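One simple way to see a "downward trend as word count increases" is to average AI scores within word-count bins. The sketch below uses hypothetical (word count, score) pairs and bin edges chosen purely for illustration; the article does not state how, or whether, articles were binned.

```python
from statistics import mean

# Hypothetical (word_count, ai_probability) pairs; bin edges are illustrative only.
articles = [(180, 0.82), (240, 0.79), (450, 0.71), (620, 0.66),
            (900, 0.55), (1300, 0.48), (2100, 0.35)]
BIN_EDGES = [0, 300, 800, 1500, float("inf")]

def mean_score_by_length(pairs, edges):
    """Average AI probability for each [lower, upper) word-count bin."""
    result = []
    for lower, upper in zip(edges, edges[1:]):
        in_bin = [p for words, p in pairs if lower <= words < upper]
        if in_bin:  # skip empty bins rather than averaging nothing
            result.append(((lower, upper), mean(in_bin)))
    return result

for (lower, upper), avg in mean_score_by_length(articles, BIN_EDGES):
    print(f"{lower}-{upper} words: mean AI probability {avg:.2f}")
```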

Note: To check that this observation is reliable, we calculated two correlation coefficients between article length and AI detection score. Both confirm a statistically significant negative correlation:

- Pearson correlation: r = -0.5265 (p-value ≈ 1.38e-87)

- Spearman correlation: ρ = -0.3995 (p-value ≈ 8.94e-48)

Both values are negative because the relationship is inverse: as article length increases, the probability of being flagged as AI decreases. The extremely small p-values mean this pattern is very unlikely to be due to chance.
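For readers who want to reproduce the two coefficients, here is a dependency-free sketch of how each is defined: Pearson's r measures linear association, and Spearman's ρ is Pearson's r applied to ranks (with tied values sharing an average rank). We don't know which tool the authors used. This sketch computes coefficients only, not p-values; SciPy's `scipy.stats.pearsonr` and `scipy.stats.spearmanr` return both. The sample data is hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson's r: covariance divided by the product of standard deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values all receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # sorted positions i..j share this rank
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman's rho: Pearson's r computed on the ranks of x and y."""
    return pearson(ranks(x), ranks(y))

# Hypothetical sample: longer articles, lower AI scores.
word_counts = [180, 240, 450, 620, 900, 1300, 2100]
ai_scores = [0.82, 0.79, 0.71, 0.66, 0.55, 0.48, 0.35]
print(pearson(word_counts, ai_scores), spearman(word_counts, ai_scores))
```

Because Spearman works on ranks, it captures any monotonic relationship, while Pearson is sensitive to the exact linear shape and to outliers; that is one reason the two values reported above can differ.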

Model detection patterns over time

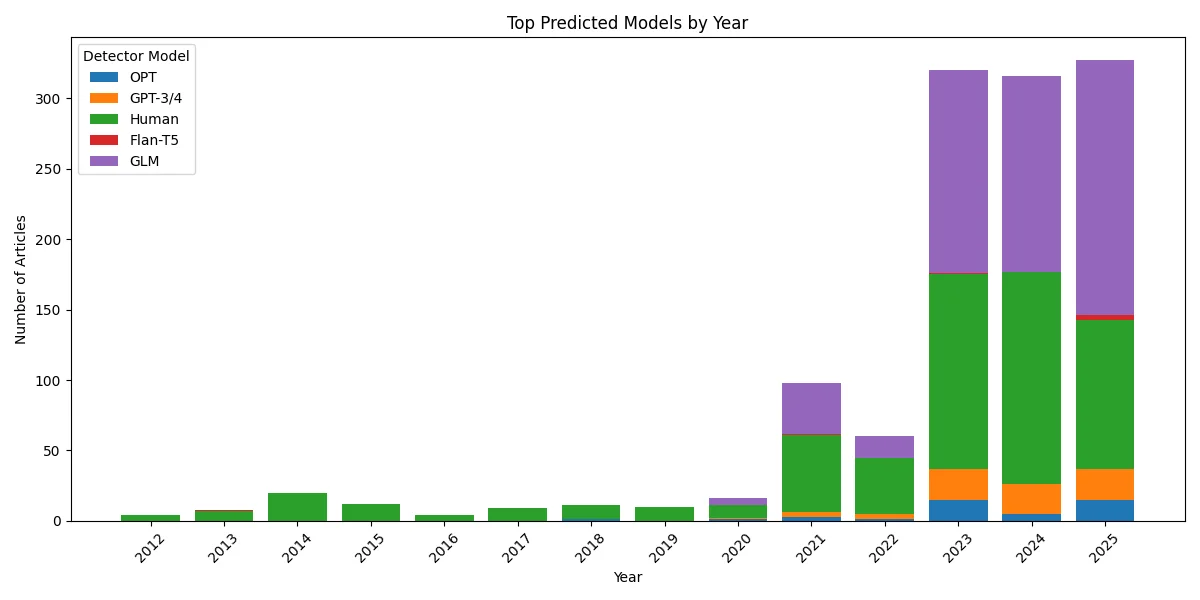

The stacked bar charts show which AI model signatures the detector found in BBC content over time.

Green (human-written): more prevalent in the early years, though still present in recent ones.

Purple (GPT-3/4): appears mostly when AI is getting a lot of attention and lines up well with OpenAI's new model releases.

Orange (GLM models): increasingly detected in recent content (2023-2025), suggesting the BBC may have experimented with multiple AI platforms.

Blue (OPT) and Red (Flan-T5): show up throughout the analysis period.

The visualization shows that AI-generated news has grown substantially, but human-written articles remain, pointing to a mixed ecosystem rather than complete automation.
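Each stacked bar is essentially a per-year breakdown of detector labels. The sketch below computes those shares, assuming (hypothetically) that every article carries a single top-scoring label; the detector's real output format is not described in the article, and the rows are invented.

```python
from collections import Counter, defaultdict

# Hypothetical (year, top detected label) rows; labels mirror the chart legend.
detections = [
    (2016, "human"), (2016, "human"), (2016, "OPT"),
    (2023, "GPT-3/4"), (2023, "GLM"), (2023, "human"), (2023, "GLM"),
]

def label_shares(rows):
    """Fraction of each year's articles attributed to each label (sums to 1)."""
    counts = defaultdict(Counter)
    for year, label in rows:
        counts[year][label] += 1
    return {
        year: {label: n / sum(c.values()) for label, n in c.items()}
        for year, c in sorted(counts.items())
    }

for year, shares in label_shares(detections).items():
    print(year, {k: round(v, 2) for k, v in shares.items()})
```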

Open dataset: BBC AI content analysis (CSV + GitHub)

Research findings are published as open data in CSV format. This lets other researchers conduct verification studies and build upon this analysis. We want to make discussions about AI in journalism more open.

The dataset includes AI probability scores spanning 2012-2025, article-length correlations, and data linking scores to major language model release dates.
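To get started with the CSV, a reader could parse it with Python's standard `csv` module. This is a minimal sketch: the column names (`published`, `ai_probability`, `word_count`) are hypothetical, so check the header row of the actual file on GitHub and adjust the keys to match.

```python
import csv
import io
from datetime import date

# Stand-in for the downloaded file; these column names are hypothetical.
SAMPLE_CSV = """published,ai_probability,word_count
2014-05-02,0.28,850
2021-03-17,0.74,420
2024-09-30,0.79,310
"""

def load_articles(handle):
    """Parse rows into typed dicts: ISO date, float probability, int word count."""
    rows = []
    for row in csv.DictReader(handle):
        rows.append({
            "published": date.fromisoformat(row["published"]),
            "ai_probability": float(row["ai_probability"]),
            "word_count": int(row["word_count"]),
        })
    return rows

articles = load_articles(io.StringIO(SAMPLE_CSV))
print(len(articles), articles[0]["published"].year)
```

For a local copy, pass an open file instead: `with open(path, newline="") as f: articles = load_articles(f)`.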

Key findings

Analysis of 13 years of BBC content shows how AI use in journalism has changed. The biggest jump in AI detection scores came in 2020-2021, when AI use appears to have moved from experimentation to regular practice.

The BBC sets clear standards for using AI responsibly. Its three main principles help balance useful technology with honest reporting, and other media organizations can learn from this when adding AI to their work.

AI is now a big part of journalism. AI content detectors like isFake.ai help identify which content comes from AI and show how the industry is changing. We don’t yet know what the future of AI in journalism will look like, but this research provides data to inform the conversation and shape policy.

Here’s what we found about how BBC’s AI content is changing:

- AI detection scores increased sharply between 2020 and 2021, in line with major language model releases.

- Longer articles tend to have lower AI scores, which means human experts are still key for detailed reports.

- High AI detection rates stayed through 2025, showing that AI use is becoming a regular thing, not just an experiment.

- These trends appear in both the full and balanced datasets, indicating the changes are robust.
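The article doesn't define its "balanced dataset". One common reading, assumed here rather than confirmed by the source, is downsampling so every year contributes the same number of articles, so that heavily covered recent years don't dominate the trend. A minimal sketch with a fixed random seed:

```python
import random
from collections import defaultdict

def balance_by_year(rows, seed=0):
    """Downsample so every year keeps as many rows as the smallest year has."""
    by_year = defaultdict(list)
    for row in rows:
        by_year[row[0]].append(row)
    k = min(len(group) for group in by_year.values())
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    balanced = []
    for year in sorted(by_year):
        balanced.extend(rng.sample(by_year[year], k))
    return balanced

# Hypothetical (year, ai_probability) rows: 2023 is over-represented.
rows = [(2015, 0.30), (2015, 0.25), (2023, 0.78), (2023, 0.80),
        (2023, 0.75), (2023, 0.81)]
balanced = balance_by_year(rows)
print(len(balanced))
```

If a trend holds in both the full and the downsampled data, it is less likely to be an artifact of uneven article counts per year.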

For comprehensive media verification, explore our AI video detector and AI image detector alongside text analysis.