What is isFake.ai?

How AI detection works and why it matters

Ever scrolled through your feed and wondered: "Is this real or AI?" That's the question millions of people ask every single day. As AI-generated content floods our digital world, from deepfakes to ChatGPT essays, the line between authentic and artificial keeps blurring. That's why we decided to build our own AI detection tool: isFake.ai.

And nooo, we aren't just another AI detector. We're a team of researchers and devs obsessed with understanding how AI works, training our own models on massive datasets, and building the most transparent (and, well, the only fun) detection system out there. Actually, we want to be the Google of truth. When you need to know what's real and what's not, we'll tell you.

What is isFake.ai?

isFake.ai is an all-in-one AI detection tool that scans text, images, audio, video, and code to identify synthetic content. While other tools work with one form of content, like essays or photos, our AI detection platform works across every format your content might take.

Four AI detectors in one place. Let's count them:

- If you want to verify if that LinkedIn post was written by ChatGPT, use our AI text detector.

- If you are suspicious about that viral celebrity video, run it through our AI video detector.

- If you think someone cloned a voice in that audio clip, check it with our AI audio detector.

- And if you grabbed an image that looks too perfect, our AI image detection tool will tell you if it's fake.

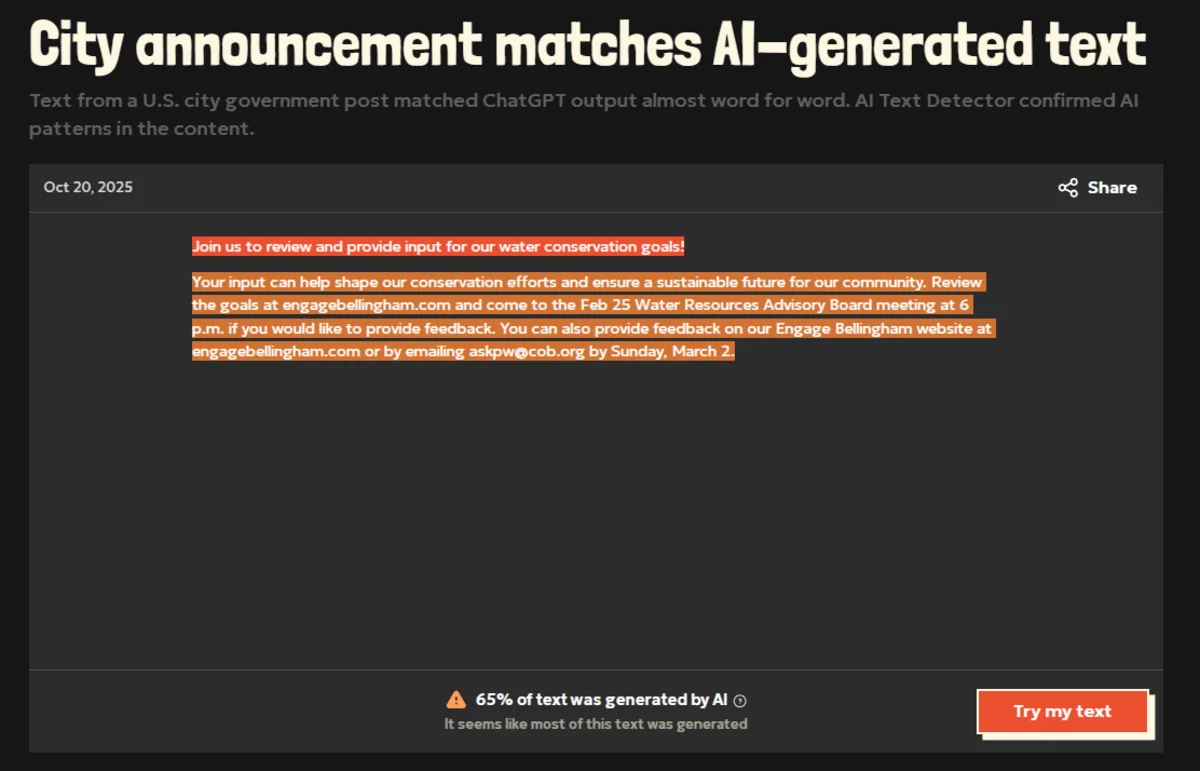

Transparency over mystery

We're cybersecurity guys, so we're not big fans of mystery, or of the yes-or-no verdicts with zero explanation that other AI detectors give you (hey, ZeroGPT and GPTZero). So every analysis includes visual evidence:

- highlighted suspicious passages for text,

- heatmaps showing artifact areas in images,

- waveform analysis for audio,

- marked frames for video.

You see exactly why we flagged something as AI. You're not just trusting our word: like real detectives, we always have evidence. Besides, you get in-depth reports with precise explanations of why our models think something is AI. You'll love it.

Real-time processing without data creep

Your content gets analyzed instantly. And because we love privacy, it's up to you whether to share your detection results or keep them hidden.

Continuously evolving detection

We test our models on real-world scenarios and openly share our research results. For example, take a look at our deep-dive research into 13 years of BBC content, where we set out to answer how AI influences journalism. That's the kind of work that keeps us ahead of new AI models and emerging deepfake techniques.

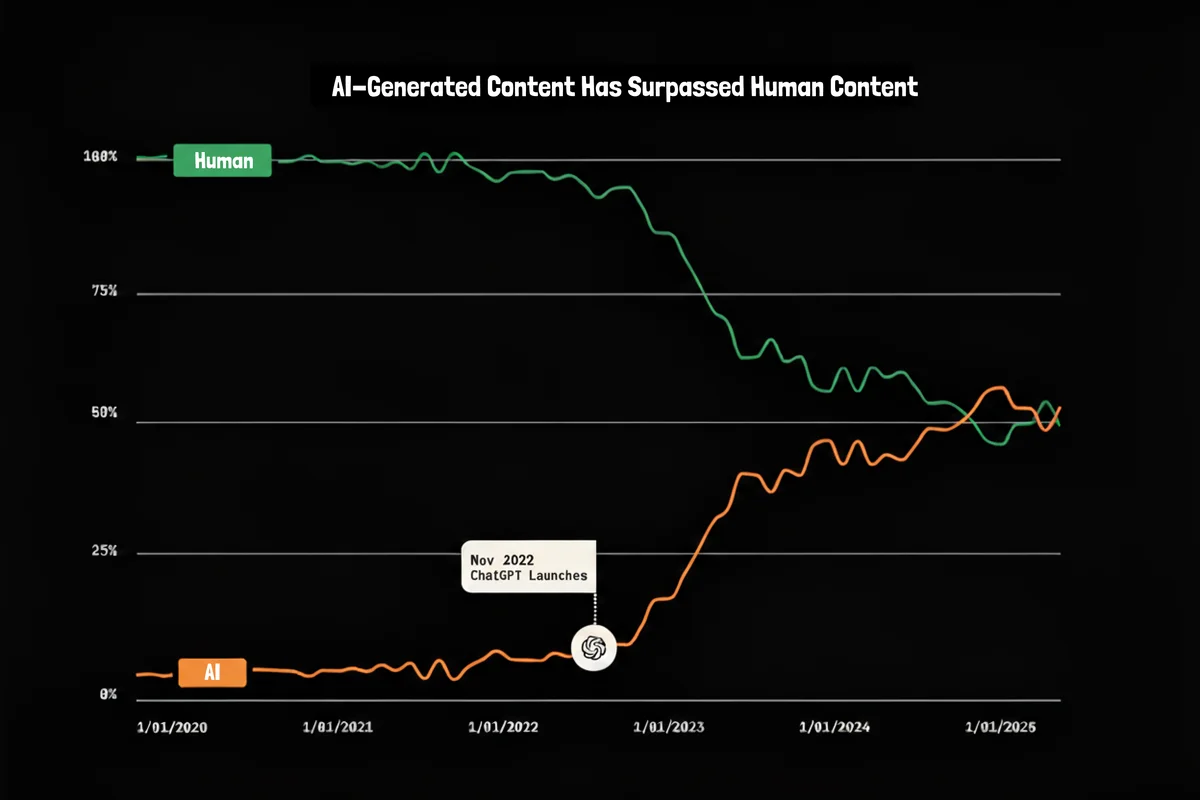

Why AI detection matters right now

The statistics would be funny if they weren't heartbreaking:

- In September 2024, fake video scams happened every five minutes.

- Between July 2023 and 2024, 82 deepfakes targeting politicians surfaced in 38 countries during election seasons.

- In Q1 2025 alone, scammers using convincing deepfakes stole over $200 million globally.

Celebrity deepfake scams cost victims hundreds of thousands each. Meanwhile, educators face constant battles with AI-written assignments, journalists need to verify source content before publishing, and businesses risk brand damage from synthetic media impersonating their executives. And no, scammers aren't getting smarter. The technology is just getting cheaper and easier to use.

But what terrifies us most isn't the capability. It's the normalization. It began with "it's just a prank", moved on to monetized fake natural disaster videos on social media, got past the uncanny valley, and led us to deepfakes so common that people have stopped questioning them. AI is now just part of the feed, and "I can't tell if that's real" has become an acceptable response. That's how online trust dies.

But we still believe that trust matters, and that's why we built isFake.ai. Because people deserve to know what they're looking at. And answering the question "is this real?" shouldn't be so tough.

How does isFake.ai actually work?

Our detection platform combines neural networks trained on millions of real and AI samples with pattern recognition algorithms that catch what the human eye, ear, and other senses miss.

AI text detection

When you run text through our AI text detection tool, we're not just checking grammar. Our tool looks for AI model signatures. Here are some of the signs you can notice yourself:

- Overly smooth rhythm and sentence flow

- Repeated word patterns and uniform structures

- Overload of linking words

- Lack of natural variation (what researchers call "burstiness")

- Overly stylized quotation marks and too many em dashes or colons

The GPT detector by isFake.ai flags suspicious passages and assigns confidence scores.

You see exactly which sentences triggered detection and why. You can learn more about it in our article devoted to AI text detection.
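To make the "burstiness" idea from the list above concrete, here is a minimal sketch in Python. It is an illustration only, not how isFake.ai's models work: it assumes burstiness can be approximated by the variation in sentence length (standard deviation divided by the mean), and the function names and sample texts are made up for this example.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split text on ., ! and ? and return the word count of each sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Hypothetical burstiness proxy: stdev of sentence length / mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    mean = statistics.mean(lengths)
    return statistics.pstdev(lengths) / mean if mean else 0.0


# Made-up samples: one with uniform sentences, one with varied rhythm.
uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat on the branch."
varied = "Stop. I never expected the storm to arrive this early in the season, honestly. Did you?"

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

A low score means every sentence is about the same length, which is one of the "too smooth" signals described above. On its own it proves nothing: plenty of humans write evenly, so treat it as one hint among many.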

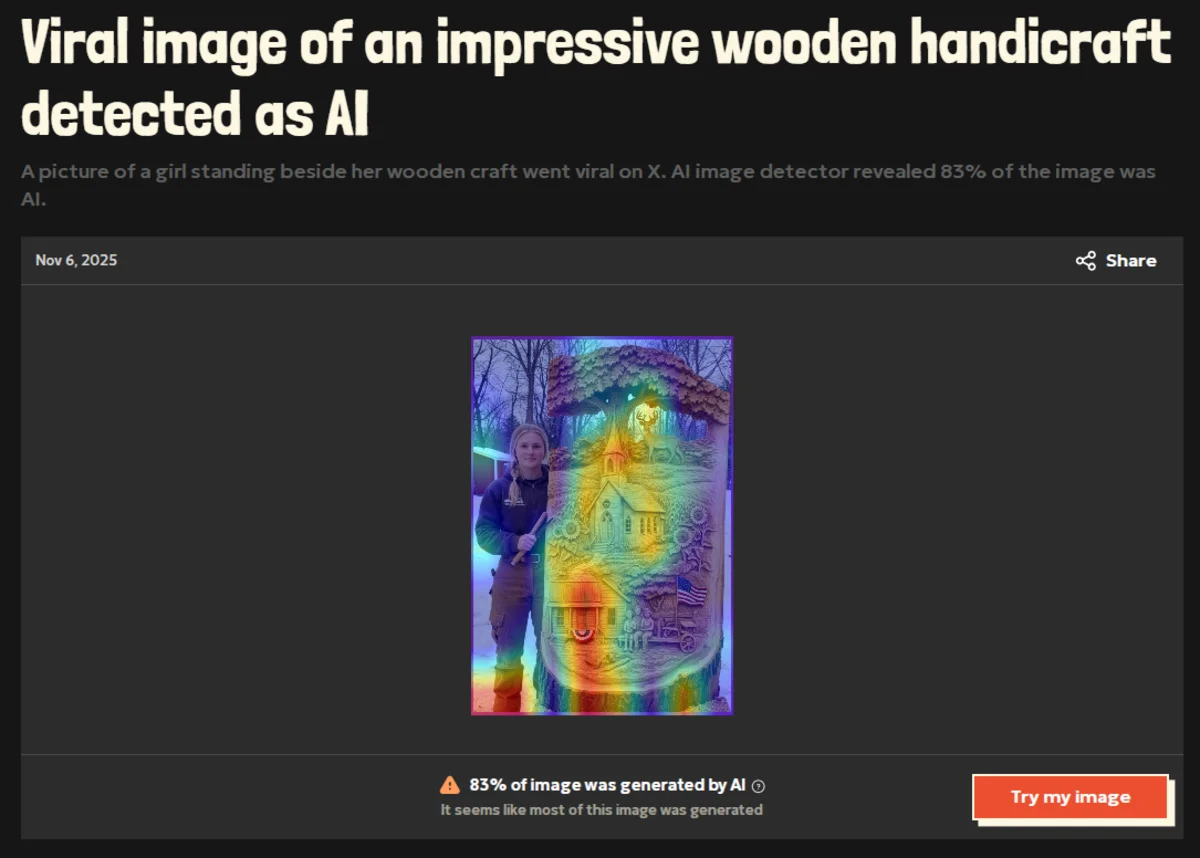

AI image detection

Our AI image detection tool digs into the digital layers of an image and scans them one by one. You've seen these AI signs before, right?

- Extra fingers or impossible hand shapes

- Lighting inconsistencies that don't make physical sense

- Unnatural skin textures that look too "plastic"

- Artifact patterns invisible to human eyes

- Edges that blend unnaturally

Models like Midjourney, Stable Diffusion, and DALL-E all leave fingerprints. Our heatmaps highlight the suspicious areas so you know exactly where the AI struggled. Besides, you can see a distinct AI signature on every AI-enhanced image. Just test a couple of pics and you'll start to recognize that beautiful pattern.

AI video detection

Deepfakes are scary because almost every video feels real until you know what to look for. Try to catch:

- Lip-sync errors that don't line up with audio

- Glitches in lighting or texture

- Unnatural eye movement patterns

- Frame inconsistencies and "melting" backgrounds

- An unreliable narrator (a suspiciously calm cameraman in the middle of the chaos)

Or, use our AI video detection tool. We mark the suspicious timestamps and flag the exact frames where detection confidence is highest.

AI audio detection

AI audio detection identifies synthetic and cloned voices. Listen closely for:

- Missing natural breathing and vocal pauses

- Overly perfect pronunciation

- Unnatural pitch consistency

- Strange background sounds

Or just trust us and take a look at how isFake.ai detects AI-generated voices.

Getting started with isFake.ai

Step 1. Upload or paste

Choose your content type. Drag and drop your file or paste your text into the detector. Oh, and no signup is required to start testing, so you can play around a little to get used to our UX… although it's pretty simple and cute, don't you think?

Step 2. Instant analysis

Get your results in seconds. Our AI detectors examine patterns, artifacts, and statistical anomalies across your entire submission.

Step 3. Get your report

You get an instant confidence score (0-100%), visual explanations highlighting suspicious elements, and a detailed breakdown explaining what triggered detection.

To upgrade your account, subscribe to Premium for just $7/month.

And if you're interested in our API (why not?), you can contact us directly. We'll announce access to it a bit later, so don't miss the chance to be among our first long-term partners.

Who uses isFake.ai and why

For educators & students

We've seen tons of news stories and posts from students who were falsely accused of submitting AI-written essays. We think it's unfair to label as AI a piece of work that someone honestly spent hours writing. We firmly believe that teachers need to assess student work fairly, without making false accusations. The isFake.ai detection platform shows which parts appear AI-generated, whether that's a fully AI-written essay or just editing assistance. Students use it to self-check before submission and to understand what triggers detection flags.

Pro tip from Is and Fake to educators

Use detection as a conversation starter, not a verdict. "This flagged high for AI" opens discussion about research methods and learning. It doesn't prove guilt. That conversation is where education happens.

For journalists & publishers

Before you publish that viral video or explosive image, verify it. Newsrooms worldwide now use AI video and image detection tools as standard verification steps. When you're fighting misinformation, detection is defense.

Pro tip from Is and Fake

Build a verification workflow before publication. Check suspicious content through detection tools, cross-reference with trusted sources, and when in doubt, reach out to the original source directly. Add a "verification note" to your articles when AI detection was used in the fact-checking process. Transparency builds trust with your audience.

For businesses & enterprises

Protect your brand from deepfakes impersonating executives. Verify employee submissions and application materials. Detect AI-written marketing content that might violate FTC guidelines. And if you're interested in our enterprise solutions, contact us. We'll help you integrate AI detection into your existing workflows.

Pro tip from Is and Fake

Establish clear policies about what detection means at your company. Document your process. Combine detection with human review. Use it for risk assessment, not automated decision-making.
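One way to put "risk assessment, not automated decision-making" into practice is to map each confidence score to a human-review step rather than to a verdict. The sketch below is a hypothetical policy written in Python: the function name, the thresholds (40 and 80), and the action labels are assumptions for illustration and are not part of isFake.ai.

```python
def triage(confidence: float, routine_at: float = 40.0, priority_at: float = 80.0) -> str:
    """Map a 0-100 detection confidence to a review action, never to a verdict.

    The default thresholds are illustrative assumptions; set your own in policy.
    """
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be between 0 and 100")
    if confidence >= priority_at:
        return "priority human review"
    if confidence >= routine_at:
        return "routine human review"
    return "no action"


# Made-up scores for three submissions.
for score in (12.0, 55.0, 92.0):
    print(f"{score:5.1f}% -> {triage(score)}")
```

Note that even the highest score only moves an item up the review queue. The decision itself stays with a person, and the thresholds live in one documented place so the policy is easy to audit.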

For anyone, anywhere

You get a suspicious email. A colleague sends a "video" that seems kinda weird. A viral post about a friend looks too dramatic. Instead of wondering, you check. That's the power of making AI detection available to everyone.

Pro tip from Is and Fake

Consider context. Highly polished writing might just be the work of a professional writer. Edited AI content becomes harder to detect. Translation effects can trigger false positives.

Accuracy, limitations & honest metrics

We don't claim perfection. There are many AI detectors, but isFake.ai has a clean UI and detailed AI detection reports. Let's take an honest look at what works and what doesn't.

What we do well:

- High accuracy on longer texts (500+ words)

- Reliable image artifact detection across common generators

- Effective deepfake video identification

- Multi-language text support

- Crystal-clear visual evidence for every verdict

Where we're honest about challenges:

- Short texts (under 300 characters) are harder to analyze accurately

- Heavily edited AI content becomes harder to detect

- Mixed human-AI collaboration can confuse any detector

- New AI models emerge faster than we can train against them (sorry, Sora isn't covered yet)

- False positives happen with highly polished, rigidly structured human writing

We always display confidence scores and show precision and recall metrics. Just so you know, we position ourselves as an advisor, not a judge. We encourage human interpretation alongside our analysis.
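If precision and recall are new to you, here is a small worked example in Python. The counts are invented for illustration and are not isFake.ai's actual results. Precision answers "of everything flagged as AI, how much really was AI?", and recall answers "of all the AI content, how much got flagged?".

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Compute precision and recall from raw detection counts."""
    flagged = true_pos + false_pos      # everything the detector called AI
    actual_ai = true_pos + false_neg    # everything that really was AI
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual_ai if actual_ai else 0.0
    return precision, recall


# Hypothetical test run: 90 AI texts correctly flagged, 10 human texts
# wrongly flagged (false positives), 30 AI texts missed (false negatives).
precision, recall = precision_recall(true_pos=90, false_pos=10, false_neg=30)
print(f"precision={precision:.2f} recall={recall:.2f}")
# prints "precision=0.90 recall=0.75"
```

In this made-up run, one in ten flags lands on a human writer. That is exactly why a flag should open a conversation rather than close a case.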

And we're constantly improving. Our models update continuously to adapt to new AI generators, new detection techniques, and real-world feedback from users.

The isFake.ai research advantage

We're not just building a tool. We're conducting serious research that shapes how the industry understands AI detection.

Our BBC AI Content Analysis project examined 13 years of BBC articles (2012-2025) using our AI text detector. We published the complete dataset as open source on GitHub, because understanding how major newsrooms use AI helps us build better detection and helps policy makers make informed decisions.

Explore truth with us

Have you seen our Explore page yet? It's a searchable index of content verified through isFake.ai. Imagine being able to search in your browser for "is this tweet AI?" and instantly finding our analysis. This way the truth becomes discoverable.

Soon you'll be able to paste a tweet or TikTok link directly into isFake.ai and get back a detailed report on how much of that content was AI-generated. Just copy, paste, and know. And you'll get more than just confidence scores and highlighted fragments.

Because in 2025, the question is no longer "can AI fool us?" It's "can we build the tools to tell the difference?"

We just did.